Principles of interactive design

March 10

Embodied Interaction

INTRODUCTION

Embodied interaction was the topic of the seminar that Lorenzo and I led. The seminar was divided into two parts: a presentation and a workshop. In the early stages of preparation, we worked through a series of readings and planned the workshop and a small warm-up game. While preparing the workshop, Lorenzo and I looked for items that participants could use in the warm-up game. In the presentation, we explained what embodied interaction is and what it means for interaction design, and we showed related examples.

WORKSHOP

Warm up - Game

In this session, we designed six different scenes: bicycles, waterfalls, boats, beaches, roller coasters and storms. The rules of the game are as follows. In each round, a group picks one scene card and shows it to their groupmates, who choose one way to present it: either with the tools we provided or by drawing. Each group has 2 minutes to act the scene out or use the tools to make sounds, but they may not speak. A correct answer earns 2 points if the scene was presented with tools, and only 0.5 points per round if it was drawn.
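The scoring rule can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not something we used during the workshop: the names `POINTS`, `round_score` and `total_score` are hypothetical, and the sketch assumes that a drawing also has to be answered correctly to earn its 0.5 points.

```python
# Points per correct answer, by presentation method (values from the rules above).
POINTS = {"tools": 2.0, "drawing": 0.5}


def round_score(method: str, answered_correctly: bool) -> float:
    """Score one round; an incorrect answer earns nothing (assumption)."""
    if method not in POINTS:
        raise ValueError(f"unknown presentation method: {method!r}")
    return POINTS[method] if answered_correctly else 0.0


def total_score(rounds: list[tuple[str, bool]]) -> float:
    """Sum the scores of all rounds played by one group."""
    return sum(round_score(method, ok) for method, ok in rounds)


# Hypothetical game: one correct round with tools, one correct drawing, one miss.
example_rounds = [("tools", True), ("drawing", True), ("tools", False)]
example_total = total_score(example_rounds)
```

Under these assumptions, four correct drawings are worth as much as one correct round with tools, which is why the rule nudges groups towards using objects and sound rather than pen and paper.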

Design - Choose one topic to design

The second part of our workshop was a design activity in which the participants split into two groups and sat at two different tables. We gave them four design prompts related to embodied interaction. Some of the prompts revolved around specific impairments: we thought it would be interesting to take something that usually relies on one aspect of our sensory spectrum (e.g. alarm clock => hearing) and look for a way to experience it through another aspect of our corporeality.

The groups were allowed to choose one of our prompts or use them as inspiration to make up their own. We asked them to discuss possible solutions and sketch them out, and then we all came together at the same table so that they could show each other what they came up with.

The two groups ended up choosing the same design prompt: “how can we control music while taking a shower or swimming in a pool?” During the process we took turns sitting at the two tables, both to observe and to guide the participants whenever uncertainties arose. We had agreed on a rough schedule for the different sections of this activity and adjusted it as we went, balancing three somewhat conflicting goals: giving the groups the time they needed to work through each phase, keeping them on their toes by pushing them to come up with ideas quickly, and finishing within the time at our disposal.

It was interesting to see how the two groups interpreted and developed the same prompt in different ways. First, one group focused on only one of the two settings and designed for listening to music in the shower, while the other developed ideas for both the shower and a public swimming pool. Second, the proposed solutions represented three different facets of the embodied interaction field.

We will call the group that proposed one solution Group 1 and the group that proposed two solutions Group 2. Group 1’s design was a gesture-based interface: the user moves their hands in different ways across the stream of water in the shower to play or stop the music, switch to the previous or next song, or control the volume. It is important to note that, although the action takes place across the water stream, the water itself is not the interface. The group planned to use some kind of sensor to detect the motions, which qualifies their design as a gesture-based interface.

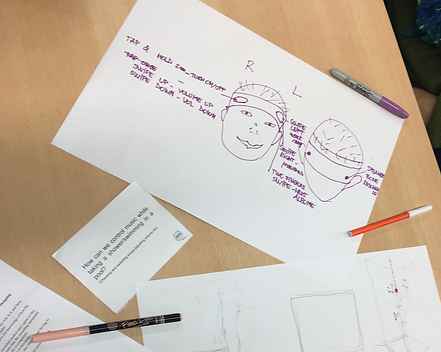

Group 2 designed one solution for the shower and one for the public pool. Their shower solution consisted of a hanging ball that could be swished or pulled down to give different commands to the music player. This can be considered a tangible interface, one that uses the relationship we already have with physical objects as the starting material to make our communication with computational systems easier. Finally, to allow users to control music while swimming in a public pool, Group 2 designed a wearable headband controlled with swiping motions. The headband would be wirelessly connected to a music-playing device (most likely a mobile phone) and would play music through bone conduction headphones.

With the inclusion of these wearable devices, the three main poles of embodied interaction were included in the conversation generated by our design activity.

EXAMPLES

Example 1: Embodied interaction can help students to learn new things in daily life

- At the Embodied Design Research Laboratory, researchers created a game in which fifth-grade students learn about ratios by holding tennis balls in the air. When the tennis balls are held at a ratio of 1:2, the screen turns green.

Link: https://edrl.berkeley.edu/sites/default/files/AbrahamsonTrninic2011-IDC.pdf
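The feedback condition in Example 1 can be sketched as a simple proportion check. This is a minimal illustration and not the laboratory's implementation: it assumes the ratio is taken between the heights of the two balls above a common baseline, and the function name `is_target_ratio` and the `tolerance` value are hypothetical.

```python
def is_target_ratio(low_height: float, high_height: float,
                    target: float = 0.5, tolerance: float = 0.05) -> bool:
    """Return True when low_height / high_height is close to the target ratio.

    Assumption: both heights use the same unit and the same baseline.
    The default target of 0.5 corresponds to a 1:2 ratio.
    """
    if low_height <= 0 or high_height <= 0:
        return False  # no meaningful ratio without two positive heights
    return abs(low_height / high_height - target) <= tolerance


# Hypothetical readings in centimetres.
green_at_10_20 = is_target_ratio(10, 20)   # 10:20 is exactly 1:2
green_at_20_40 = is_target_ratio(20, 40)   # a different pair, same ratio
green_at_10_15 = is_target_ratio(10, 15)   # 10:15 is 2:3, outside the tolerance
```

The point of the sketch is that many different hand positions satisfy the same condition (10 and 20, 20 and 40, and so on), so the student has to discover the relationship between the two heights rather than a single fixed pose.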

Example 2: Morphology extension kit: A Modular Robotic Platform for Physically Reconfigurable Wearables

- There is an intersection between embodied interaction and wearable technologies.

- The robotic platform can be used as an output device that gives feedback about a state in the digital world (a text message is received) or in the physical world (a heat sensor signals a dangerous situation).

- The platform can also be used as an input device, to control a videogame or the volume of the computer speakers.

Link: https://dl.acm.org/authorize.cfm?key=N43233 (paper and video)

Example 3: Working with an Autonomous Interface: Exploring the Output Space of an Interactive Desktop Lamp

- There is an intersection between embodied interaction and physical computing.

- Again, the lamp is used as an output device to give feedback about something happening in the digital world (a text or email is received) or in the physical world (someone is at the door, or time has passed and you should drink water).

- It relies on social and cultural skills. When the lamp points at something, we interpret it as the lamp wanting to show us something, as happens between people.

- We attribute intentionality and meaning to the lamp’s movement and we expect it to be coupled with an event we should pay attention to.

Example 4: Exploring Around-Device Tangible Interactions for Mobile Devices with a Magnetic Ring

- A very simple idea that builds on the existing context (everyone has a mobile phone with a magnetometer).

- Brings interaction with digital technology in the physical world, relying on the movements and skills we acquire in our everyday experience.

Link: https://dl.acm.org/authorize.cfm?key=N43356 (paper and video)

Example 5: Gestural Archeology

- Interactive virtual exploration of an archaeological site.

- The CAVE system is used to provide an immersive VR experience.

- The user interacts with the system through gestures and can walk and move around in the environment.

Link: https://www.youtube.com/watch?v=vBJIpwSHQXA (video), https://www.doc.gold.ac.uk/~mas02mg/MarcoGillies/gestural-archeology/ (blog post)

Example 6: Blind Maps

- Connects a tactile device to a smartphone.

- Doesn’t require wearing earphones: the user can maintain auditory awareness of their surroundings.

- Gives real-time information about the route.

- Downside: being based on maps, it needs feedback from users in order to register temporary changes to routes.

Link: http://ciid.dk/education/portfolio/idp12/courses/introduction-to-interaction-design/projects/blind-maps/ (project description, video)

Example 7: The MEteor project: learning through enacted predictions of how objects move in space

- Mixed reality environment used to teach elementary school students about gravity and orbits.

- Students used bodily gestures to launch an asteroid at a target or to make it orbit around a planet.

- Compared to a control group that used a desktop simulation, the students who used the mixed reality environment showed higher engagement, greater interest and better learning outcomes.

Link: https://www.sciencedirect.com/science/article/pii/S036013151630001X (paper)

Example 8: TRANSFORM as Dynamic and Adaptive Furniture

- Tangible Media Group (MIT Media Lab).

- Shape display technology.

- Interactive, shape-changing furniture.

- Can support different physical activities.

Link: http://tangible.media.mit.edu/project/transform-as-dynamic-and-adaptive-furniture/ (video and paper)